Why Scaling Is Not the Answer to Everything

Equally important is the availability and quality of (human-generated) data

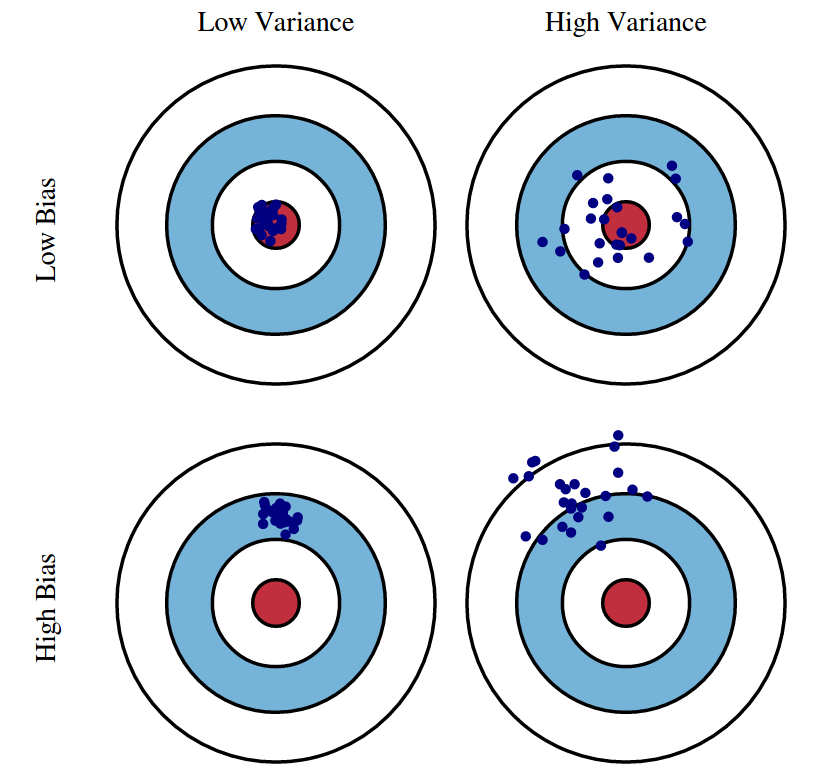

There was a time when “after a certain point, bigger models get worse” was common sense in ML. It made sense: a model should be complex enough to capture patterns, but not so complex that it ends up memorizing the training data instead. Yes, I’m talking about the bias-variance trade-off, which says that a model should balance under-fitting and over-fitting: rich enough to express the underlying structure in the data but simple enough to avoid fitting spurious patterns [1].

Let’s break it down [2]:

Bias is the inherent error you obtain from your classifier, even with infinite training data. It arises because your classifier is biased toward a particular kind of solution (e.g. a linear classifier); in other words, bias is inherent to the model. Randomness in the underlying data is accounted for separately, as noise.

Variance captures how much your classifier changes if you train on a different training set. How over-specialized is your classifier to a particular training set (overfitting)? If we have the best possible model for our training data, how far off are we from the average classifier?

Noise is the unavoidable randomness in the data itself. How big is this data-intrinsic noise? This error measures ambiguity due to your data distribution and feature representation.
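For squared-error loss, these three terms add up exactly to the expected test error. Here is a sketch of the decomposition in the notation of the Cornell lecture notes, where $h_D$ is the classifier trained on dataset $D$, $\bar{h}$ is the average classifier over all training sets, and $\bar{y}(x)$ is the expected label at $x$ (the symbols are borrowed from those notes, not defined elsewhere in this post):

```latex
$$
\underbrace{\mathbb{E}_{x,y,D}\!\left[(h_D(x)-y)^2\right]}_{\text{expected test error}}
=
\underbrace{\mathbb{E}_{x,D}\!\left[(h_D(x)-\bar{h}(x))^2\right]}_{\text{variance}}
+
\underbrace{\mathbb{E}_{x}\!\left[(\bar{h}(x)-\bar{y}(x))^2\right]}_{\text{bias}^2}
+
\underbrace{\mathbb{E}_{x,y}\!\left[(\bar{y}(x)-y)^2\right]}_{\text{noise}}
$$
```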

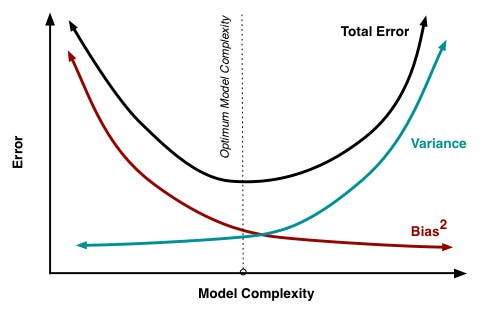

Traditional thinking is about finding the sweet spot between underfitting and overfitting, as illustrated in Figure 2. Plotted against model complexity, test error traces the classic U-shaped curve, and the sweet spot sits at its minimum. For decades, this was the rule of thumb. But deep neural networks had other plans.

At first, everything followed the expected pattern: smaller models underfit and larger models start overfitting.
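The classical pattern is easy to reproduce on a toy problem. The sketch below is my own illustration, not taken from any of the cited papers: it fits polynomials of increasing degree to noisy samples of a sine curve and reports training and test error for each degree. The target function, noise level, sample sizes, and the name `poly_fit_errors` are all assumptions chosen for the example.

```python
import numpy as np


def poly_fit_errors(degrees, n_train=30, n_test=500, noise=0.3, seed=0):
    """Fit one polynomial per degree and return (train_mse, test_mse) lists."""
    rng = np.random.default_rng(seed)

    def target(x):
        # Hypothetical ground-truth function for this toy example.
        return np.sin(3 * x)

    x_train = rng.uniform(-1, 1, n_train)
    x_test = rng.uniform(-1, 1, n_test)
    y_train = target(x_train) + noise * rng.normal(size=n_train)
    y_test = target(x_test) + noise * rng.normal(size=n_test)

    train_mse, test_mse = [], []
    for degree in degrees:
        # Least-squares fit in the Legendre basis, which is better
        # conditioned than raw powers of x at higher degrees.
        model = np.polynomial.Legendre.fit(
            x_train, y_train, deg=degree, domain=[-1, 1]
        )
        train_mse.append(float(np.mean((model(x_train) - y_train) ** 2)))
        test_mse.append(float(np.mean((model(x_test) - y_test) ** 2)))
    return train_mse, test_mse


if __name__ == "__main__":
    degrees = [1, 3, 5, 9, 15]
    train, test = poly_fit_errors(degrees)
    for d, tr, te in zip(degrees, train, test):
        print(f"degree={d:2d}  train_mse={tr:.3f}  test_mse={te:.3f}")
```

Training error can only go down as the degree grows, because each model contains the previous one. Test error is the quantity to watch: in the classical picture it falls at first and then rises once the polynomial starts chasing noise.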

But then, something surprising emerged. Researchers discovered that if you keep scaling the model beyond this point, test error peaks and then starts decreasing again. This is Deep Double Descent, a phenomenon first observed by Belkin et al. and later explored by Nakkiran et al. [3] What Nakkiran et al. found is that deep learning models have two distinct regimes:

Under-parameterized regime: When model complexity is small relative to the dataset size, test error follows the classic U-shaped curve predicted by the bias-variance tradeoff.

Over-parameterized regime: When models become sufficiently large to interpolate the training data (i.e., achieve near-zero training error), test error starts decreasing again.

This doesn’t mean that the bias-variance tradeoff no longer applies. Instead, it shows that as models scale, the relationship between complexity and generalization shifts in ways that conventional wisdom didn’t anticipate.
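One common way to probe the two regimes on a laptop is random-feature regression, where "model size" is simply the number of random features. The sketch below is a toy setup of my own, not the experiment from Belkin et al. or Nakkiran et al.: it fits the minimum-norm least-squares solution on random ReLU features and records training and test error as the width crosses the number of training points. The data-generating process, the widths, and the name `random_feature_errors` are assumptions.

```python
import numpy as np


def random_feature_errors(widths, n_train=40, n_test=1000, d=5, noise=0.1, seed=0):
    """Return a list of (width, train_mse, test_mse) for random ReLU features."""
    rng = np.random.default_rng(seed)

    # Hypothetical linear ground truth with additive label noise.
    w_true = rng.normal(size=d)
    x_train = rng.normal(size=(n_train, d))
    x_test = rng.normal(size=(n_test, d))
    y_train = x_train @ w_true + noise * rng.normal(size=n_train)
    y_test = x_test @ w_true + noise * rng.normal(size=n_test)

    results = []
    for width in widths:
        # Fixed random first layer; only the output weights are fitted.
        weights = rng.normal(size=(d, width)) / np.sqrt(d)
        bias = rng.normal(size=width)
        phi_train = np.maximum(x_train @ weights + bias, 0.0)
        phi_test = np.maximum(x_test @ weights + bias, 0.0)

        # lstsq returns the minimum-norm solution when width > n_train,
        # i.e. in the over-parameterized (interpolating) regime.
        coef, *_ = np.linalg.lstsq(phi_train, y_train, rcond=None)

        train_mse = float(np.mean((phi_train @ coef - y_train) ** 2))
        test_mse = float(np.mean((phi_test @ coef - y_test) ** 2))
        results.append((width, train_mse, test_mse))
    return results


if __name__ == "__main__":
    for width, tr, te in random_feature_errors([5, 10, 20, 40, 80, 160, 640]):
        print(f"width={width:4d}  train_mse={tr:.2e}  test_mse={te:.3f}")
```

Once the width exceeds the number of training points, training error drops to essentially zero. Whether test error shows a visible peak near that threshold depends on the noise level and the random seed, so it is worth running with a few different settings before drawing conclusions.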

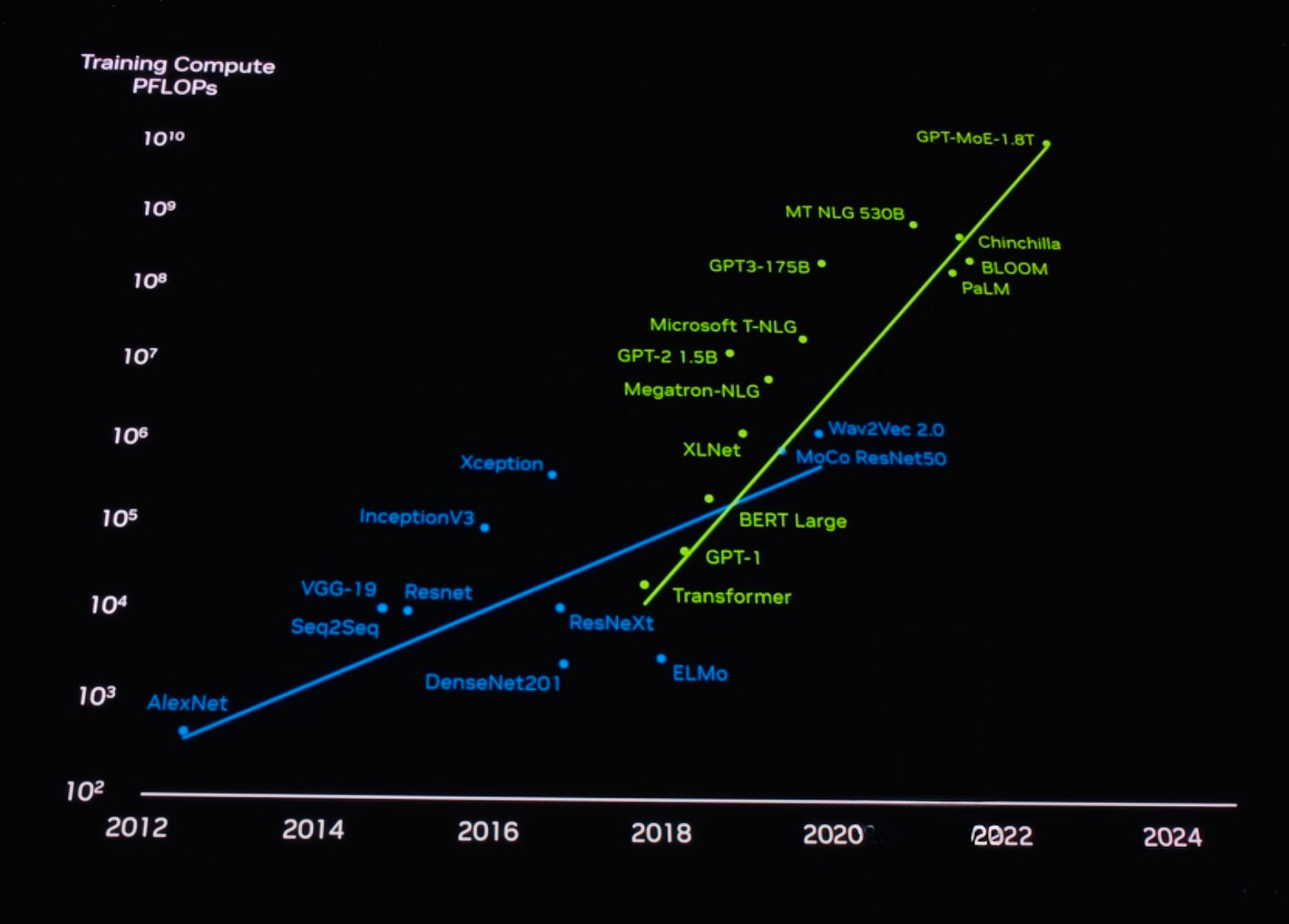

Based on the findings of Scaling Laws for Neural Language Models [4], model performance depends most strongly on scale, which consists of three factors: the number of model parameters (excluding embeddings), the dataset size, and the amount of compute used for training. So, the new playbook is bigger models, more data, and more training compute. The numbers bear this out: Figure 3 shows how things have changed on the training compute side. Between 2012 and 2023, training compute increased enormously. In 2012, AlexNet used compute in the range of 10³ PFLOPs, while today’s models, like GPT-MoE-1.8T, push the limits with a jump of seven orders of magnitude. Moreover, in the LLM era, scaling has accelerated significantly in a relatively short amount of time, as illustrated by the green line. That is a clear contrast with the pre-transformer era shown by the blue line.
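To make the power-law idea concrete, here is a minimal sketch of the parameter-count law from Kaplan et al., which has the form L(N) = (N_c / N)^α_N when data and compute are not the bottleneck. The default constants are the approximate fitted values reported in that paper (α_N ≈ 0.076, N_c ≈ 8.8 × 10¹³ non-embedding parameters); treat them as ballpark figures tied to that paper's setup, and note that the function name is my own.

```python
def kaplan_loss_from_params(n_params, n_c=8.8e13, alpha_n=0.076):
    """Predicted test loss (nats per token) as a function of model size.

    n_params counts non-embedding parameters. The defaults are approximate
    fitted constants from Kaplan et al. (2020), not universal values.
    """
    return (n_c / n_params) ** alpha_n


if __name__ == "__main__":
    for n in (1e8, 1e9, 1e10, 1e11):
        print(f"N={n:.0e}  predicted loss={kaplan_loss_from_params(n):.3f}")
```

The small exponent is the point: under this fit, every 10x increase in parameters multiplies the loss by 10^(-0.076), a reduction of roughly 16%. Gains keep coming, but each one costs an order of magnitude more model.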

But scaling isn’t just about training compute; it’s also about model size. GPT-1 had 117 million parameters and a 512-token context window. GPT-2 scaled up more than 10x to 1.5 billion parameters and doubled the context window to 1,024 tokens. GPT-3 followed this trend and got over 100x larger than its predecessor at 175 billion parameters, with another doubling of context size. GPT-4 reportedly jumped another 10x to an estimated 1.8 trillion parameters.

With each leap in scale, models score higher, perform better, and set new benchmarks. But what exactly does better mean?

Better at learning? Better at thinking? Better at reasoning? Or just better at memorizing? The truth is that LLMs succeed on benchmarks by retrieving known answers rather than reasoning on the fly.

This does not mean they are useless. As I mentioned in Why Today’s Agents Are Only Slightly Agentic, “they are still valuable for automating repetitive tasks and shaping the next generations of AI systems.” But if scaling keeps working (while models are not actually thinking), what actually limits scaling?

Well, data limits scaling. While much recent progress has focused on training ever-larger models, research suggests that scaling dataset size must be prioritized just as much. Hence, the biggest roadblock to scaling AI is not just the scarcity of human-produced data, but also the quality of the available data. [5][6]
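A back-of-the-envelope calculation shows why data becomes the constraint. The sketch below combines two widely used approximations, both of which are assumptions here rather than exact results: training compute is roughly 6 FLOPs per parameter per token (C ≈ 6·N·D), and the compute-optimal ratio in Hoffmann et al. works out to roughly 20 training tokens per parameter. The function name and defaults are hypothetical.

```python
import math


def compute_optimal_split(train_flops, tokens_per_param=20.0, flops_per_param_token=6.0):
    """Split a compute budget into (n_params, n_tokens).

    Assumes C ~= flops_per_param_token * N * D and D ~= tokens_per_param * N.
    Both are rules of thumb, not exact laws.
    """
    n_params = math.sqrt(train_flops / (flops_per_param_token * tokens_per_param))
    n_tokens = tokens_per_param * n_params
    return n_params, n_tokens


if __name__ == "__main__":
    for budget in (1e21, 1e23, 1e25):
        n, d = compute_optimal_split(budget)
        print(f"C={budget:.0e} FLOPs -> N={n:.2e} params, D={d:.2e} tokens")
```

Under these assumptions, parameters and tokens both grow with the square root of compute, so a 100x larger budget calls for about 10x more training tokens. That demand is what runs into the finite supply of human-written text.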

To sustain scaling, companies are turning to synthetic data. But how? The most obvious way to get around the finite amount of human-produced data is data augmentation: generating variations of existing data to artificially expand the dataset. This isn’t new; AlexNet was already using augmentation on images to reduce overfitting. Another approach is to forgo human data altogether through self-play learning, in which a model teaches itself by competing against itself. For instance, AlphaGo Zero [7] surpassed human-level play entirely through self-play, ultimately defeating its predecessor AlphaGo.
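For images, augmentation can be as simple as the two label-preserving transforms AlexNet relied on: random crops and horizontal flips. The following is a minimal NumPy sketch of that idea, not the original implementation; the function name `augment` and the array layout (height, width, channels) are assumptions.

```python
import numpy as np


def augment(image, crop_size, rng):
    """Return a random square crop of the image, flipped horizontally half the time."""
    height, width = image.shape[:2]
    top = rng.integers(0, height - crop_size + 1)
    left = rng.integers(0, width - crop_size + 1)
    patch = image[top:top + crop_size, left:left + crop_size]
    if rng.random() < 0.5:
        patch = patch[:, ::-1]  # mirror along the width axis
    return patch


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    image = rng.integers(0, 256, size=(256, 256, 3), dtype=np.uint8)
    batch = [augment(image, crop_size=224, rng=rng) for _ in range(8)]
    print(len(batch), batch[0].shape)
```

Every call yields a slightly different view of the same picture, so one labeled image stands in for many. The catch is that no new information enters the dataset; augmentation stretches existing data rather than replacing it.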

However, the lack of high-quality data is more challenging to address given the multi-dimensional nature of the problem. The Internet holds hundreds of trillions of tokens, including many that are repetitive, biased (explicitly or subtly), privacy-sensitive, or shaped by search engine optimization. If that’s not concerning enough already, future models may even be trained on AI-generated content, further reinforcing the existing scarcity and quality problem.

The more AI models train on data produced by their predecessors, the faster they degrade [8]. This phenomenon is known as model collapse, where models forget the true underlying data distribution over time. The data they generate ends up polluting the training set of the next generation. Trained on polluted data, the next generation then misperceives reality.
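The mechanism shows up even in the simplest possible "model". In the toy simulation below, each generation fits a Gaussian to a small sample drawn from the previous generation's fitted Gaussian, so no fresh real data ever enters the loop. This is a simplified illustration in the spirit of the single-Gaussian analysis in Shumailov et al., not a reproduction of their experiments; the sample size, generation count, and function name are assumptions.

```python
import numpy as np


def simulate_collapse(n_samples=20, n_generations=200, seed=0):
    """Return the fitted standard deviation at each generation (index 0 is the true one)."""
    rng = np.random.default_rng(seed)
    mu, sigma = 0.0, 1.0  # the "real" data distribution
    stds = [sigma]
    for _ in range(n_generations):
        # Each generation sees only samples produced by the previous model...
        data = rng.normal(mu, sigma, size=n_samples)
        # ...and refits its own mean and standard deviation from them.
        mu, sigma = float(data.mean()), float(data.std())
        stds.append(sigma)
    return stds


if __name__ == "__main__":
    stds = simulate_collapse()
    for generation in (0, 10, 50, 100, 200):
        print(f"generation {generation:3d}: fitted std = {stds[generation]:.2e}")
```

Small estimation errors compound from one generation to the next, and the fitted spread tends to shrink: the tails of the original distribution are the first thing to disappear. Real language models are far more complex, but the feedback loop is the same.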

Ultimately, what I’ve realized while writing this is that many debates about scaling boil down to a deeper, more fundamental question: What are our expectations from LLMs? If we’re looking for tools with a vast memory that can accurately retrieve information, today’s models already deliver. But if we want them to reason or understand, just making them bigger won’t get us there. I agree with Meta’s Chief AI Scientist, Yann LeCun, aka the creator of LeNet, that we’re not going to get to human-level AI by just scaling up LLMs. Not when they rely on biased, repetitive, or even AI-generated data. In this rapidly changing environment, we shouldn’t overlook some of the bigger questions. What about our collective responsibility to ensure high-quality datasets rather than indiscriminately scraping the Internet? And what happens if we ignore model collapse, especially as synthetic data becomes more prevalent in training datasets?

Belkin, Mikhail, et al. “Reconciling modern machine-learning practice and the classical bias–variance trade-off.” Proceedings of the National Academy of Sciences, 116.32 (2019): 15849-15854.

Cornell University, “Intro to Machine Learning.” https://www.cs.cornell.edu/courses/cs4780/2018fa/lectures/lecturenote12.html.

Nakkiran, Preetum, et al. “Deep double descent: Where bigger models and more data hurt.” Journal of Statistical Mechanics: Theory and Experiment, 2021.12 (2021): 124003.

Kaplan, Jared, et al. “Scaling laws for neural language models.” arXiv preprint arXiv:2001.08361 (2020).

Hoffmann, Jordan, et al. “Training compute-optimal large language models.” arXiv preprint arXiv:2203.15556 (2022).

Yann LeCun, in his interview with Lex Fridman in 2024.

Shumailov, Ilia, et al. “AI models collapse when trained on recursively generated data.” Nature 631.8022 (2024): 755-759.